Video Analysis

The video’s authenticity and the technology powering this drone cannot be debunked.

If you doubt me, you can analyze the video yourself to verify its authenticity and uncover the drone's technology. I suggest reading my witness testimony, along with the biological effects of the drone described in the next section, before you start. Although I used AI to help quantify this analysis, you can independently verify every step using any method you choose. Again, I had to use AI because I am not a quantum physicist or computer scientist. However, I did not blindly copy and paste hallucinations or low-quality "AI-slop" responses. You don't have to be a genius to notice the anomalies in the video, and I summarize them in simple terms so that you do not need to be an "expert" to understand them. There is also a TLDR at the bottom of this page.

No conventional propulsion system or sensor artifact can explain all aspects of this video. Although I strongly suggest verifying everything yourself, you are welcome to skip this section if you already know that this drone uses gravitic propulsion and you find technical details boring. This section is primarily intended for physicists, fellow nerds, and skeptics. The original video file and the hex data are at the bottom of the page, and the commands and results used are underlined in each applicable section.

This analysis is not perfect, as no analysis method is perfect. No gyroscope or similar data can be extracted from the video, so no analysis method can account for rolling-shutter distortion and camera movement with 100% accuracy, especially since the distortions are unconventional and field-based. Allow for a small margin of error. What matters most is my testimony, the authenticity of this video, all of the phenomena that match gravitic propulsion, and Matthew Livelsberger's testimony. Nearly all of the anomalies I'm about to show you have no explanation that follows the laws of physics or normal sensor behavior.
The anomalies also make perfect sense when you consider that these drones are built to be undetectable by air defense systems. If you are not confident already, you should be confident that this drone is distorting the space, time, and light around it after reading this analysis. I will also repeat and expand upon some aspects mentioned in the last section.

Verifying Metadata/Camera roll check

Can't fake this.

This proves that this is an actual iPhone video. It combines a live iPhone screen recording of the original video file, messages from that night, emails sent to Sam Shoemate, a live IMEI check, and a synced camera capture of my phone and computer, all overlaid on an OBS screen recording of my computer performing a metadata check on the video. You can't fake this. Use ExifTool, MediaInfo, exif.tools, or any other method to extract and verify the full metadata. Use any hex editor to validate the hex of the original video file or to reconstruct the video from the hex data. Use any method you can think of to confirm the video's authenticity; this is the most important step.
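For the hex check, here is a minimal sketch that walks the top-level boxes (atoms) of the file, assuming it is a standard QuickTime/ISO-BMFF container, which is the format iPhone .MOV files use. `list_top_level_boxes` is a hypothetical helper, not part of ExifTool, MediaInfo, or any other tool mentioned above. Each box begins with a 4-byte big-endian size and a 4-byte type. A size of 1 means a 64-bit size follows, and a size of 0 means the box runs to the end of the file.

```python
import struct
from typing import BinaryIO, List, Tuple


def list_top_level_boxes(f: BinaryIO) -> List[Tuple[str, int, int]]:
    """Return (type, offset, size) for each top-level QuickTime/ISO-BMFF box."""
    boxes = []
    f.seek(0, 2)                      # seek to the end to learn the file length
    file_size = f.tell()
    offset = 0
    while offset + 8 <= file_size:
        f.seek(offset)
        size, raw_type = struct.unpack(">I4s", f.read(8))
        header = 8
        if size == 1:                 # 64-bit "largesize" follows the type field
            size = struct.unpack(">Q", f.read(8))[0]
            header = 16
        elif size == 0:               # box extends to the end of the file
            size = file_size - offset
        if size < header:             # malformed size: stop instead of looping forever
            break
        boxes.append((raw_type.decode("latin-1"), offset, size))
        offset += size
    return boxes
```

Usage: open the video in binary mode and pass the handle, e.g. `list_top_level_boxes(open(path, "rb"))`. The resulting list (typically including `ftyp`, `mdat`, and `moov`) can then be cross-checked against the offsets shown in a hex editor.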

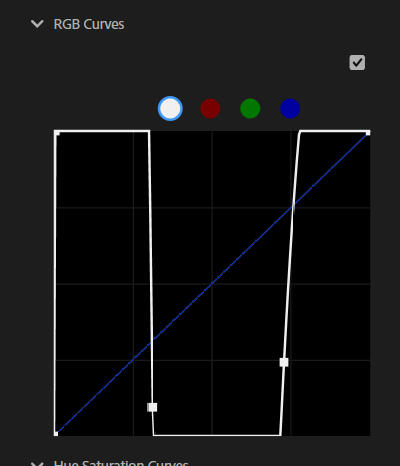

The drone's immediate radial field

Visible radial distortion in the drone's immediate vicinity throughout the video

When you increase the luminance of the video, it becomes clear that a radial field of distorting light surrounds the drone throughout its flight. In Alcubierre's original 1994 paper, a thin shell of negative-energy density is required to create a warp bubble around a craft. This thin bubble likely protects the craft from the extreme gravitational gradient, which would destroy the craft if left unprotected.

The Drone Stays the Same Size on Video as It Moves Closer to the Camera

I observed this in person, and it is corroborated by optical flow, by the camera's malfunction, and by your own eyes. All of them show motion and distortion in the scene, but not the proportional scaling of the drone expected under normal perspective. The drone is not merely traveling laterally relative to the camera, as it appears to in the video. My visual observation and the sensor's hard reset as it enters the drone's immediate distortion field both confirm this.

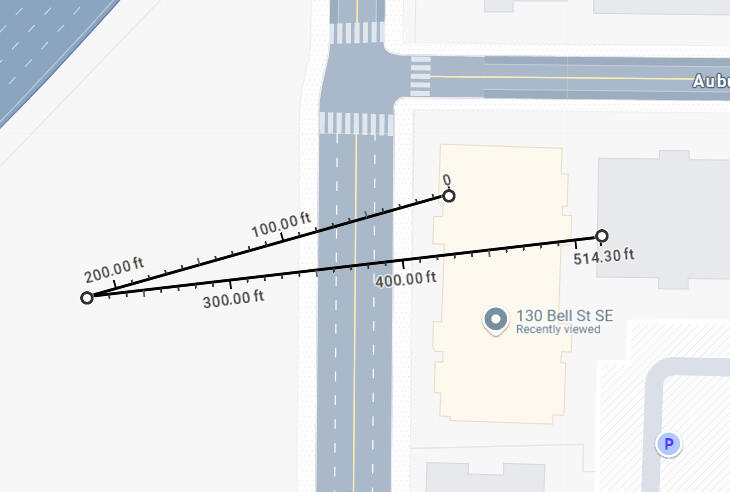

The point at the end of the 200 ft line is the drone's approximate location when the video starts. When the ISP returns a blank frame at the 5.5 s mark, the drone is flying directly over the apartment, ~10-20 feet above the ceiling. The flyover is marked where the 514 ft line intersects the apartment building. Again, I observed the drone flying over the apartment with my own eyes. As I mentioned in text messages immediately after the event, which I show later, the drone was much closer than it appears on video. Once again, it is common sense that an object you film as it moves closer to you should grow in the frame. The drone flies around 200 feet closer between the start and the end of the video while descending in altitude. Despite this, it never increases in size on video, even while the zoom remains constant. Given that the drone's propulsion distorts spacetime, it redirects light rays around itself (akin to gravitational lensing), causing it to appear at a fixed size regardless of distance. The field creates a localized warping of geometry, causing light paths from its edges to warp nonlinearly and mimic a fixed focal length. This observation proves gravitational field influence, as no known inertial propulsion method can maintain a constant scale during forward motion.
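The expectation that an approaching object should grow on screen follows from the standard pinhole camera model. This is a general optics relation, not a measurement taken from this video. For an object of physical width W at distance Z, imaged with focal length f, its width w on the sensor is approximately:

```latex
w = \frac{f\,W}{Z}, \qquad \frac{w_2}{w_1} = \frac{Z_1}{Z_2} \quad \text{(constant } f \text{ and } W\text{)}
```

At a fixed zoom, halving the distance should therefore double the object's width in the frame.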

Luminance Suppression Near the Drone

The horizontal zone near the drone consistently shows the lowest luminance in every frame where the drone is the subject

Luminance lowered

RGB values below [30,30,30]

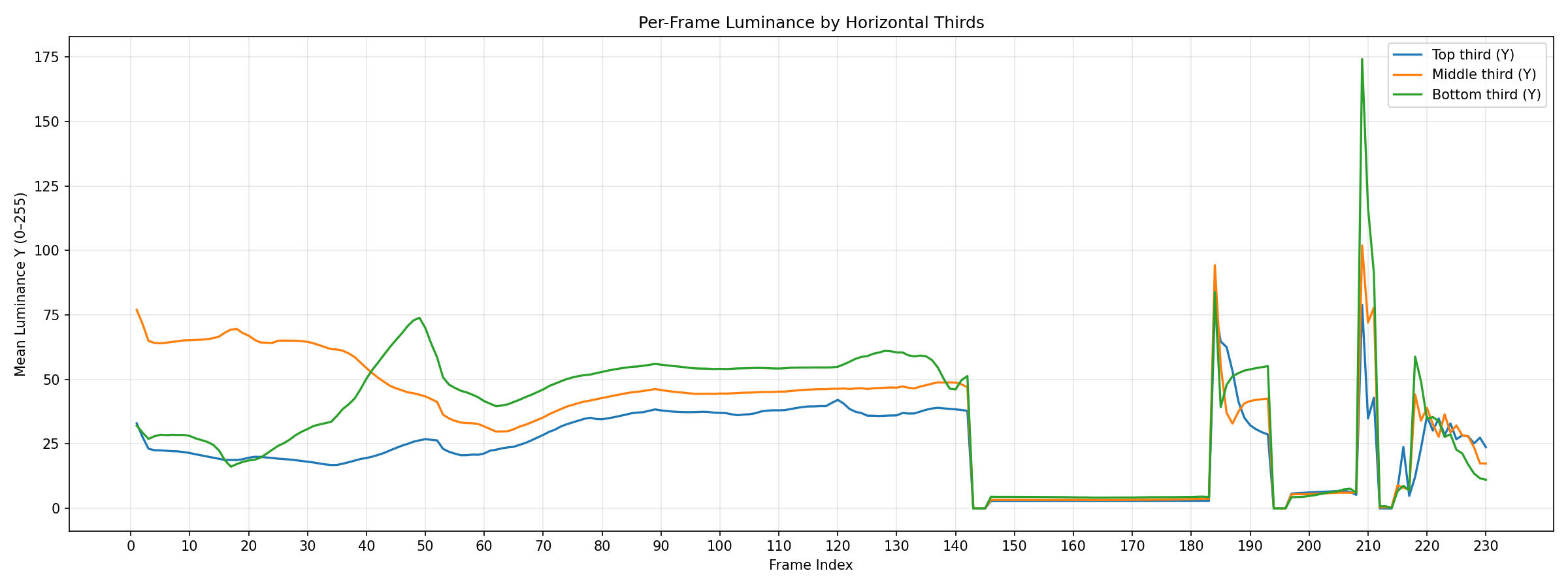

Comparing the luminance of each horizontal third of the video

When you decrease the luminance of the video, a dark horizontal zone near the drone becomes apparent. The field extending horizontally around the drone alters the intensity of the surrounding light, suppressing the luminance detected by the iPhone camera. A warp bubble interacting with space near the drone is redirecting the path of light. Such suppression is consistent with gravitational field zones affecting the wavelength and direction of the incoming light. The darkest pixels behind the drone's path perfectly match how the gravitic propulsion drone diverts the light behind it. When the phone is in the drone's blueshift zone, the luminance abnormally spikes to levels previously unseen. These spikes correlate with the iPhone frame timing shifts and other distortions observed later in this analysis, providing further evidence of the drone field's photon manipulation.
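Here is a minimal NumPy sketch for reproducing the per-third luminance comparison and the RGB < [30, 30, 30] mask. It assumes frames have already been decoded to 8-bit RGB arrays (for example with FFmpeg or OpenCV). The helper names are hypothetical. Using Rec. 709 luma weights as the definition of "luminance" is also an assumption, since an editor's scopes may weight the channels differently.

```python
import numpy as np


def rec709_luma(rgb: np.ndarray) -> np.ndarray:
    """Rec. 709 luma from an (H, W, 3) RGB array of any numeric dtype."""
    rgb = rgb.astype(np.float64)
    return 0.2126 * rgb[..., 0] + 0.7152 * rgb[..., 1] + 0.0722 * rgb[..., 2]


def luma_by_third(rgb: np.ndarray) -> list:
    """Mean luma of the top, middle, and bottom horizontal thirds of a frame."""
    y = rec709_luma(rgb)
    bands = np.array_split(y, 3, axis=0)      # split the rows into three bands
    return [float(b.mean()) for b in bands]


def dark_pixel_mask(rgb: np.ndarray, threshold: int = 30) -> np.ndarray:
    """True where every channel is below `threshold` (RGB < [30, 30, 30] by default)."""
    return np.all(rgb < threshold, axis=-1)
```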

Redshift and blueshift zones after adjusting color scopes

Another example of how the drone affects the surrounding light

The drone causes an extended redshift and blueshift zone in its surrounding area. The redshift in the front and the blueshift behind also perfectly match the drone's central redshift beginning in its direction of travel, and my documented time dilation, which I provide evidence for on the next page.

Keying (removing) red and blue from the video.

When you key out the blue, the blueshifted light in the drone's trail becomes obvious, further proving that the drone compresses spacetime behind it.

This is when the "Color Key" effect is applied to the red in the video. As you can see, there is a denser concentration of red directly in front of the drone.
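The keying step can be approximated outside an editor. This sketch assumes a simple Euclidean-distance key in RGB space with a hand-picked tolerance. It is not Premiere Pro's Color Key algorithm, and `color_key` and `keyed_fraction_by_column` are hypothetical names. Counting keyed pixels per column shows whether they concentrate on one side of the subject.

```python
import numpy as np


def color_key(rgb: np.ndarray, key_rgb, tolerance: float) -> np.ndarray:
    """Alpha mask: 0 where a pixel lies within `tolerance` (Euclidean RGB
    distance) of `key_rgb`, 1 elsewhere. A rough stand-in for an editor's
    color-key effect, not a reproduction of any specific product."""
    diff = rgb.astype(np.float64) - np.asarray(key_rgb, dtype=np.float64)
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    return (dist > tolerance).astype(np.uint8)


def keyed_fraction_by_column(alpha: np.ndarray) -> np.ndarray:
    """Fraction of keyed (removed) pixels in each column of the frame."""
    return 1.0 - alpha.mean(axis=0)
```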

Raising luminance reveals the transparent middle of the drone

Further proves the transparent nature of the drone and suppressed light caused by the drone

As I mentioned in my witness testimony, the drone had a transparent middle. At the start of the video, the drone faces away from the camera, then rotates toward the camera as it travels closer. Luckily, you don't have to just take my word for the drone's in-person appearance. This rotation and the transparent nature of the drone can be confirmed by altering the color values of the first few frames while the drone is facing in the opposite direction. As you can see above, two distinct red patterns are visible at the top and bottom of the drone. During the following few frames, the redshift begins, and the bottom red light of the drone disappears while the top red light stays visible. This parallels the bottom light being the last light to recover later in the video when the redshift starts to angle away from the camera around frame 100.
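Raising luminance in an editor is a tone-curve adjustment. This sketch assumes a simple power (gamma) curve in place of the editor's actual controls. The curve lifts dark and mid tones while leaving pure black and pure white unchanged. Because it is monotonic, any structure it reveals was already present in the stored pixel values.

```python
import numpy as np


def lift_shadows(rgb: np.ndarray, gamma: float = 0.5) -> np.ndarray:
    """Brighten the dark tones of an 8-bit frame with a power curve.
    gamma < 1 lifts shadows and midtones; 0 and 255 map to themselves."""
    x = rgb.astype(np.float64) / 255.0
    return np.clip(np.round(255.0 * x ** gamma), 0, 255).astype(np.uint8)
```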

True Black Pixels

Blue pixels represent true black values (RGB = 0,0,0)

True black pixels enlarged x5.

True black pixels

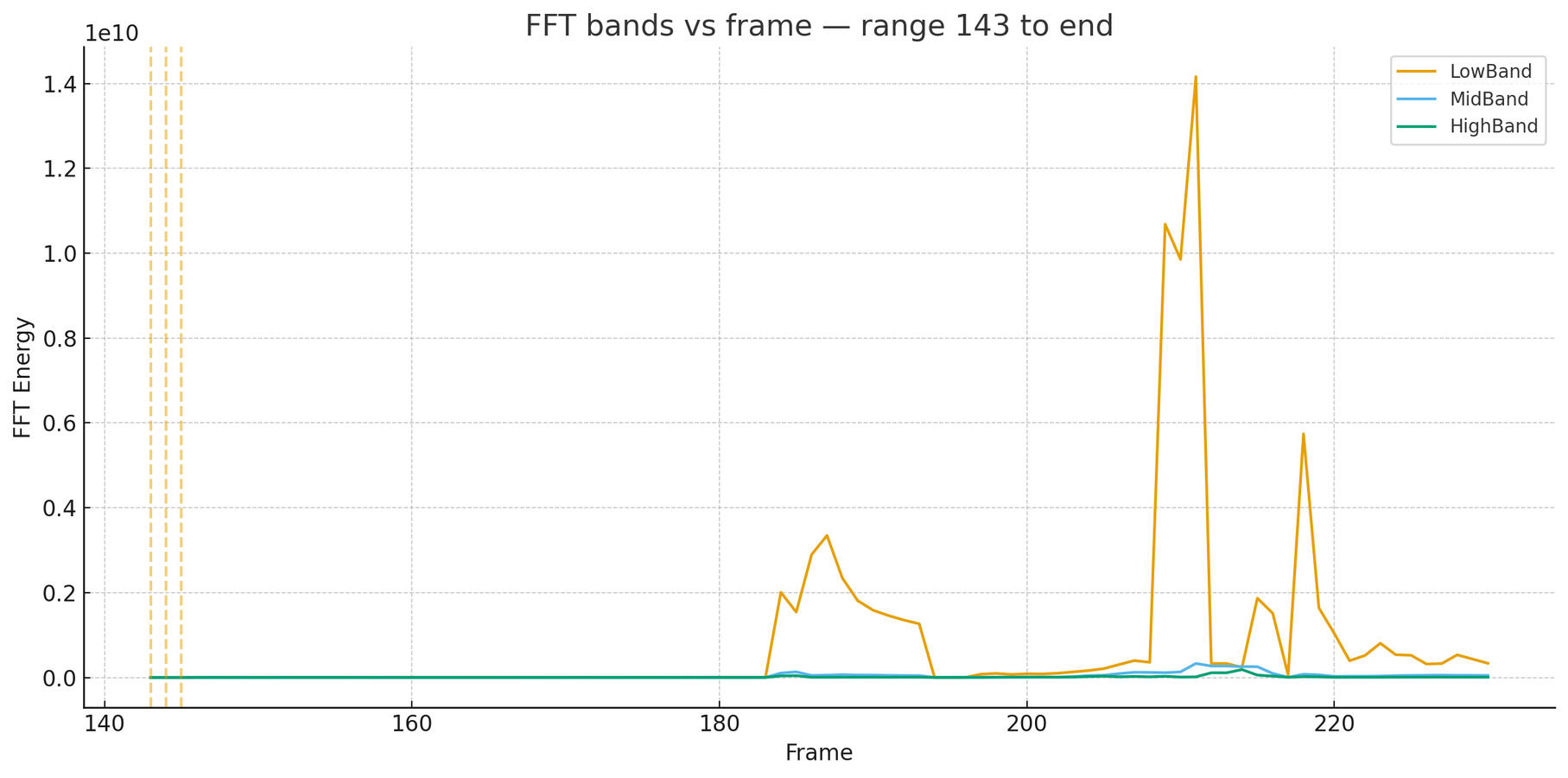

These values reflect pixels where the sensor outputs a blank value due to unreliable/highly distorted pixels. The second true black value is "coincidentally" present in the center of the drone. As the camera is closest to the drone's blueshift zone on frame 143, the entire frame is true black. This occurs because the camera's ISP fails to capture a coherent geometry of the photons in front of the window that are actively being warped by the drone, causing the sensor to default to a true black output. When the sensor recovers after these black frames, light hitting the sensor remains distorted, so the ISP still returns true black values. This occurs primarily near the drone's last location on the frame, providing even more evidence for the drone being the cause of this phenomenon. No known propellerless or wingless propulsion technology can cause any of these phenomena, especially when shielded by a roof and a window.
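Counting true-black pixels per frame can be sketched as follows, assuming decoded 8-bit RGB frames. The helper names are hypothetical. Whether a pixel decodes to exactly (0, 0, 0) depends on the decoder and on its video-range to full-range conversion, so use the same decoder for every frame you compare.

```python
import numpy as np


def true_black_mask(rgb: np.ndarray) -> np.ndarray:
    """True where all three channels are exactly 0 (RGB = 0,0,0)."""
    return np.all(rgb == 0, axis=-1)


def true_black_stats(frames) -> list:
    """For each decoded frame: (number of true-black pixels, fraction of frame)."""
    stats = []
    for rgb in frames:
        mask = true_black_mask(rgb)
        stats.append((int(mask.sum()), float(mask.mean())))
    return stats


def enlarge_mask(mask: np.ndarray, factor: int = 5) -> np.ndarray:
    """Nearest-neighbour upscale (e.g. x5) so isolated black pixels are visible."""
    return np.kron(mask.astype(np.uint8), np.ones((factor, factor), dtype=np.uint8))
```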

Increasing Luminance Reveals Hidden Color in Black Pixels Orbiting the Drone

Reveals suppressed color in the raw video. This phenomenon is strongest near the drone, proving that the field is strongest near the drone.

The orbiting pixel region suggests a gravitational gradient surrounding the drone. This orbit may reflect field-induced light path distortion, creating lensing rings that shift color based on position and depth in the field. This red/blue pattern is consistent with the visible gravitational redshift and blueshift in the video. Once again, remember that in person, every color shift was invisible to the naked eye, and the color, shape, and size of the drone remained constant throughout its entire flight. These black pixels near the drone are sensor responses to the field emitted by the drone. Gravitic propulsion produces subtle, coherent distortions in the electromagnetic field, made visible in CMOS sensor arrays, as shown in the video data.

Windowsill light warping

Cannot be caused by any other propulsion system

Let's revisit this clear example of spacetime field lensing. Once again, the camera remained stationary during this period. There is no sound of movement in the foreground, yet you can hear my voice. Beyond my assurance that I did not move the blinds, you can confirm this on your own. The drone remains in roughly the same vertical position, yet the light converges toward the drone. As the drone passes directly above the apartment, the front of the field pulls on the environment below and the back pushes on the environment above. This is evident in the movement of the light on the blinds, while the blinds themselves do not move. This movement perfectly matches a spacetime compression zone at the back of the craft and an expansion zone at the front.

Light convergence and expansion/compression as the drone rotates and flies directly overhead

"Impossible" Light Distortion

Again, the drone was about 10-20 feet above the roof as it approached the camera during this period of the video. As the drone angles its compression field toward the camera before the sensor blackout, it is positioned at the very top left of the frame. You might initially think this placement was luck. However, consider that the drone is actively expanding spacetime in front of it and compressing it behind it, and that this frame shift occurs as the drone rotates its field. Its horizontal-dominant compression field is now facing the camera, and its expansion field is now facing the light to the left of the drone. Given that, it makes perfect sense that the drone sits at the very top left of the frame. As the field rotates, the drone stretches the light above its top light away from the sensor and pulls the light below it toward itself. This makes it impossible to capture the light above the drone from my camera's angle. It is why the iPhone's image stabilization pushes the entire frame upward as the drone rotates and the light in the scene shifts relative to the drone.

This movement is also not captured by various optical flow methods, which are designed to track pixel movement. This implies a stabilization anomaly caused by light distortion rather than actual motion. The stronger distortion near the drone during this period can also be verified with an anisotropy analysis of these frames, and this stronger local movement implies field-based warping caused by the drone. The drone manipulates the geometry of light, not just air or inertia. The drone's low-luminance zone also rotates at roughly the same angle as the field, providing further evidence that the drone affects the surrounding light.

Field realignment + field-centered frame realignment + foreground light converging + more = localized spacetime distortion.

Quick question: Have you ever seen a drone, or any object, cause this phenomenon?
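The text does not say which anisotropy measure was used. One common choice, assumed here, is the coherence of the gradient structure tensor over a region. It is 0 when gradients point in all directions equally and 1 when they are all aligned. Comparing a box around the drone with a box elsewhere in the same frame gives a like-for-like number. `roi_anisotropy` is a hypothetical helper.

```python
import numpy as np


def roi_anisotropy(gray: np.ndarray) -> float:
    """Structure-tensor coherence of a grayscale region, in [0, 1].
    0 = no dominant gradient direction (isotropic); 1 = all gradients aligned."""
    g = gray.astype(np.float64)
    gy, gx = np.gradient(g)               # np.gradient returns d/drow, d/dcol
    jxx = (gx * gx).sum()
    jyy = (gy * gy).sum()
    jxy = (gx * gy).sum()
    trace = jxx + jyy                     # lambda1 + lambda2
    if trace == 0:
        return 0.0                        # flat region: orientation undefined
    # lambda1 - lambda2 for the 2x2 tensor [[jxx, jxy], [jxy, jyy]]
    diff = np.sqrt((jxx - jyy) ** 2 + 4 * jxy ** 2)
    return float(diff / trace)
```

Usage: crop the same-sized box around the drone and around a control area, e.g. `roi_anisotropy(gray[y0:y1, x0:x1])`, for each frame in the period of interest.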

More Examples of Foreground and Background Light Manipulation

The warping of the drone's light perfectly matches the behavior of an expansion and compression zone consistently throughout the video, even as the drone rotates.

Star overlaying windowsill

Frames 135–138: The light from a distant star overlays and briefly dominates the upward-pulled light from the windowsill

The drone’s field warps the paths of incoming light, making a background light source (the star) appear in front of a foreground object (the windowsill). This resembles gravitational lensing, where massive objects bend light from background sources. The field may also alter photon arrival timing, momentarily intensifying or misplacing photons from the star relative to the windowsill’s light, causing the overlay and subsequent disappearance. Combined with other anomalies, this star overlay is not explainable by camera error, parallax, or encoding. However, these anomalies do align with predictions of how gravitic propulsion can distort light trajectories and apparent spatial relationships in a scene.

Nonlinear Motion Vectors Between the Drone and Star

During this period, my camera is relatively stationary. Although the drone remains in roughly the same spot in the frame, the rest of the frame is moving, which becomes apparent when you examine the star. This phenomenon is most evident in frames 62-80. This is, once again, consistent with gravitational lensing and the drone's compression and expansion of light.
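One way to quantify the star's motion relative to the drone is assumed here and is not necessarily the method used for the figure. For each light, take the intensity-weighted centroid of bright pixels inside a fixed region of interest, then track the difference between the two centroids across frames 62-80. The ROI coordinates and brightness threshold are hypothetical and must be chosen for the video.

```python
import numpy as np


def bright_centroid(gray: np.ndarray, roi, threshold: float):
    """Intensity-weighted centroid (x, y) of pixels above `threshold` inside
    roi = (x0, y0, x1, y1). Returns None if nothing in the ROI is bright enough."""
    x0, y0, x1, y1 = roi
    patch = gray[y0:y1, x0:x1].astype(np.float64)
    w = np.where(patch > threshold, patch, 0.0)
    total = w.sum()
    if total == 0:
        return None
    ys, xs = np.indices(patch.shape)
    return (x0 + float((w * xs).sum() / total), y0 + float((w * ys).sum() / total))


def relative_track(frames, star_roi, drone_roi, threshold: float = 200.0):
    """Per-frame (dx, dy) offset of the star relative to the drone (star - drone)."""
    out = []
    for g in frames:
        s = bright_centroid(g, star_roi, threshold)
        d = bright_centroid(g, drone_roi, threshold)
        out.append(None if s is None or d is None else (s[0] - d[0], s[1] - d[1]))
    return out
```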

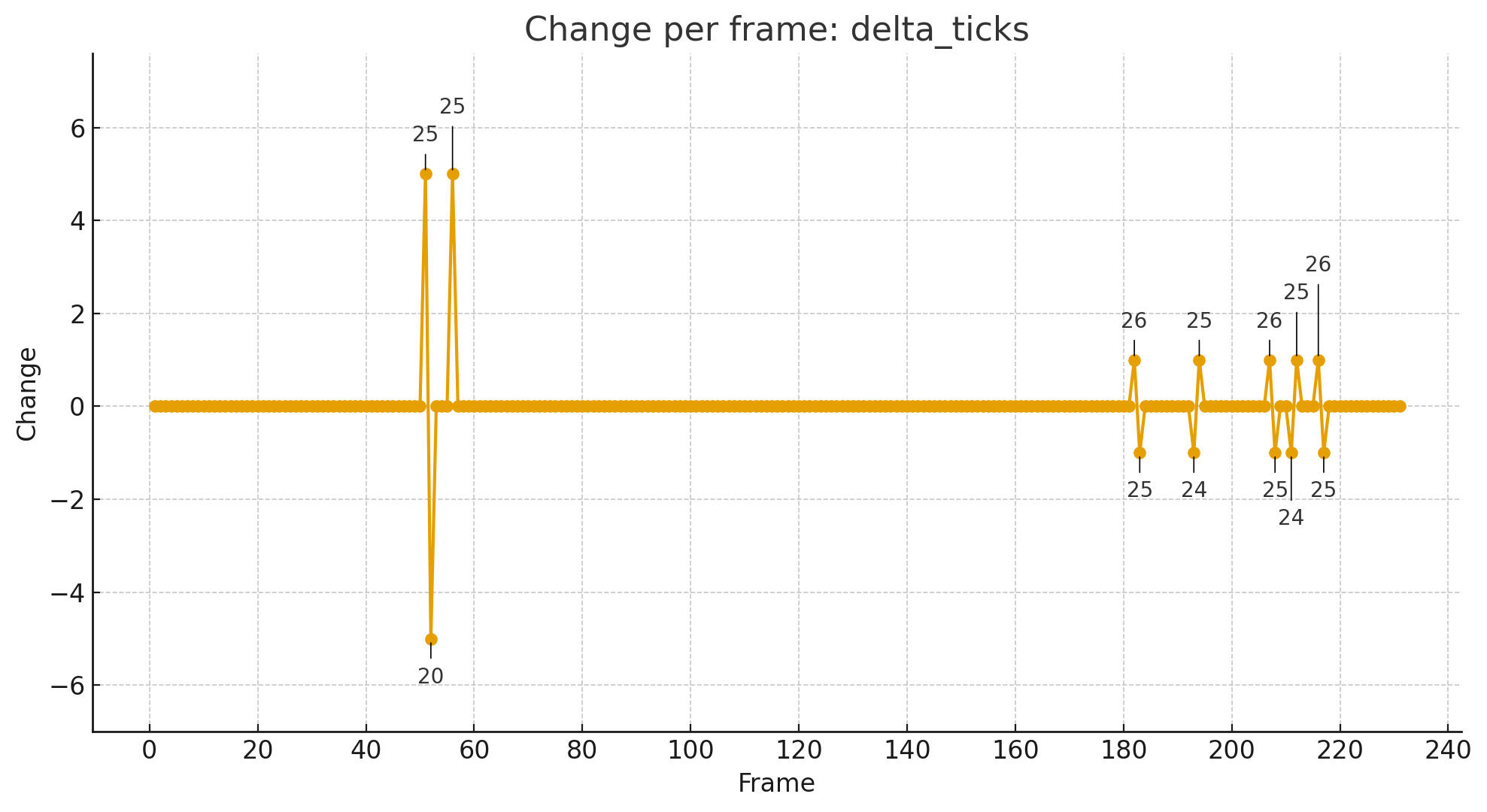

STTS & Timed Metadata Ticks/Blueshift Zone

STTS Ticks

Midtones Raised

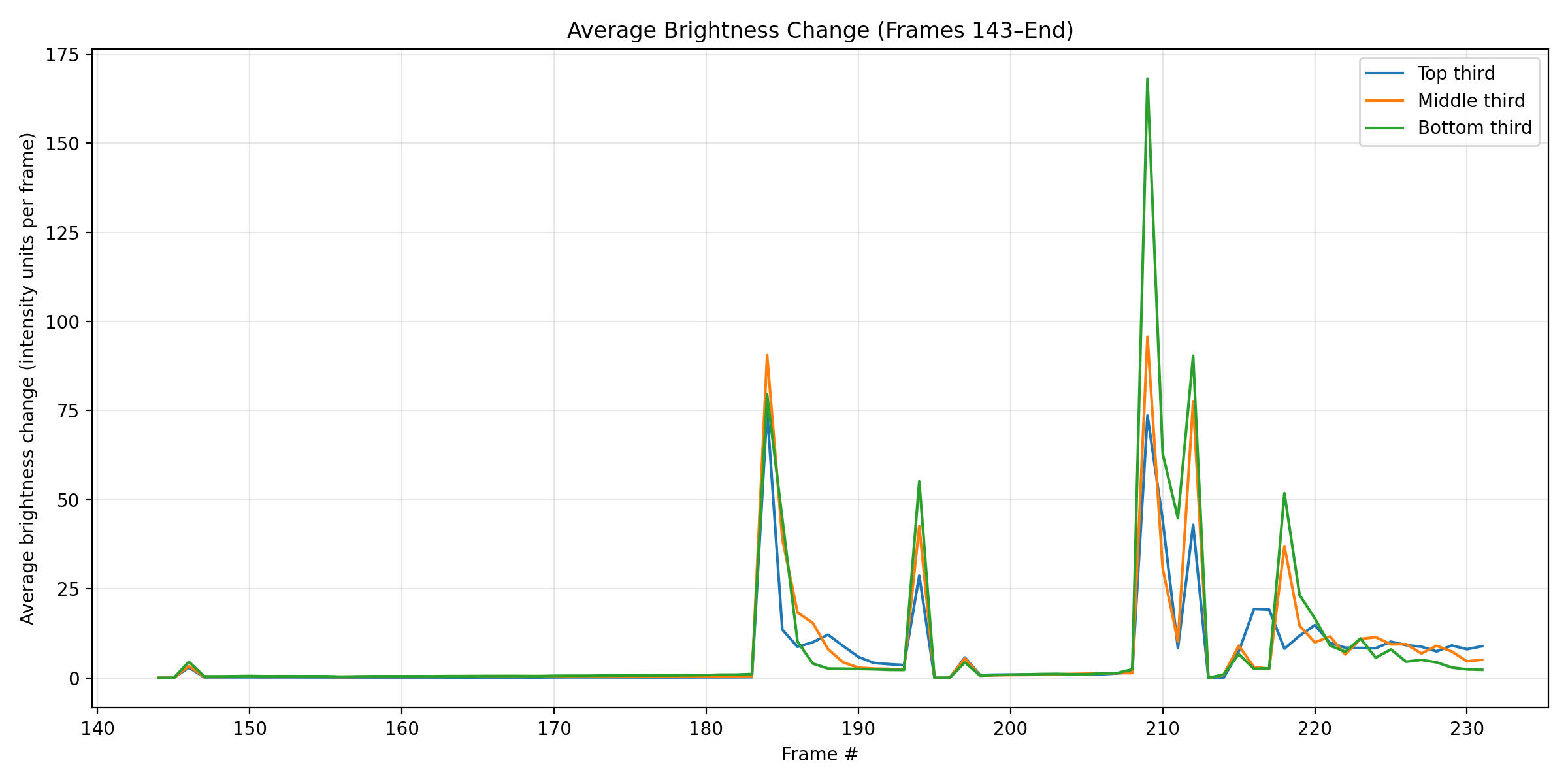

Two sources explain why the video alternates between bright and dark as the drone flies overhead. The first is the video's luminance profile. The second is the capture time of each frame, calculated from the "Time-to-Sample" (STTS) tick switches in the hex data. iPhones generally don't oscillate STTS ticks unless the camera detects a changing light environment. When tick durations increase, the camera has determined that there is not enough light in the scene; when they decrease, it has determined that there is too much. From frame 144 to the STTS tick switch at frame 184, there are no luminance changes and no tick switches, even when the star is introduced on frame 173. However, lifting the midtones of these sections reveals that the sensor was still capturing light during this period. The tick switch at 183 could be explained by the star entering the scene and the camera adjusting to the new light source. However, that explanation does not account for why the tick switches, and the light captured by the camera, fluctuate continuously and dramatically while the star's light remains constant. While the camera is in the drone's blueshift zone, the RGB values each fluctuate near 0.001 when analyzed in Premiere Pro, the lowest possible value an iPhone camera can record. Values of 0.001 are typically recorded only when a scene is extremely dark. This suggests the gravitic field is still active and intermittently interacting with the camera sensor, as it keeps changing the path of light in the area even after the drone flies directly overhead.
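The STTS durations can be decoded directly from the hex. Per the ISO base media file format (ISO/IEC 14496-12), an `stts` box holds a version/flags word, an entry count, and then (sample_count, sample_delta) pairs. The sketch below expands those runs into per-frame durations and lists the frames where the duration changes. The function names are hypothetical. The track `timescale` must be read from the same track's `mdhd` box rather than assumed.

```python
import struct
from typing import List, Tuple


def parse_stts(box: bytes) -> List[Tuple[int, int]]:
    """Parse a complete 'stts' box (8-byte header included) into
    (sample_count, sample_delta) runs."""
    size, box_type = struct.unpack(">I4s", box[:8])
    if box_type != b"stts":
        raise ValueError("not an stts box")
    if len(box) < size:
        raise ValueError("truncated stts box")
    # bytes 8..11: 1-byte version + 3-byte flags; bytes 12..15: entry count
    entry_count = struct.unpack(">I", box[12:16])[0]
    runs = []
    for i in range(entry_count):
        start = 16 + 8 * i
        runs.append(struct.unpack(">II", box[start:start + 8]))
    return runs


def frame_durations_ms(runs, timescale: int) -> List[float]:
    """Expand runs into one duration per frame, in milliseconds.
    `timescale` is the ticks-per-second value from the track's 'mdhd' box."""
    out = []
    for count, delta in runs:
        out.extend([1000.0 * delta / timescale] * count)
    return out


def tick_switches(durations) -> List[int]:
    """Frame indices whose duration differs from the previous frame's."""
    return [i for i in range(1, len(durations)) if durations[i] != durations[i - 1]]
```

To locate the box, search the file's bytes for `stts`; the four bytes immediately before that marker are the box size. A file with an audio track contains more than one `stts` box, so confirm that the one you parse belongs to the video track.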

Along with this anomaly, the light from the windowsill continues to warp and converge toward the drone throughout this distortion period. The windowsill's warp is visibly much more dramatic than the star's, because the light from the windowsill is directly inside the drone's warp bubble. The windowsill's warp persists even while the camera overlays the star correctly. This drastically weakens the argument for any conventional explanations. If spacetime is oscillating slightly, the arrival time of photons changes frame-to-frame, which the camera firmware might interpret as frame rate instability, resulting in tick switches. Until the field fully dissipates, light in the foreground continues to warp unpredictably.

Altogether, the data shows that the camera is behaving as if there is a fluctuating light source in the area, despite none being present. This, combined with the STTS tick switch pattern, confirms that the drone is altering the frequency, intensity, and path of the light being recorded. These tick switches and brightness oscillations immediately stop as soon as I stop recording the warped light outside the window, when I angle my camera down and capture the drone operators' car. This oscillation in light intensity also matches the multiple groups of distorted light converging towards the drone while it travels toward the camera, as illustrated in the SSIM map later in this analysis. When you combine this with the brightness change per pixel per frame analysis shown later in the section, you can definitively conclude that the drone is actively manipulating the light captured by the camera.

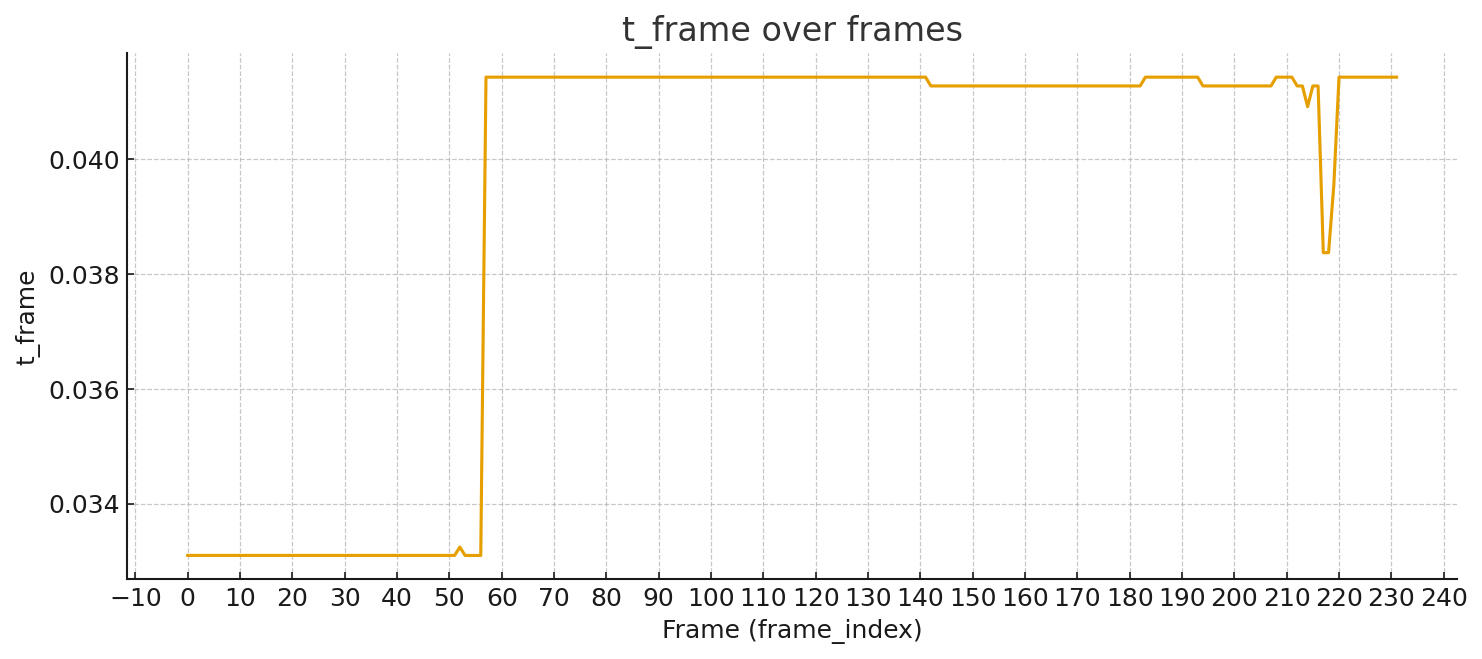

Metadata Timestamps

The t_frame values show the frame-to-frame encoding timing stored in the metadata, taken directly from the camera pipeline. They reveal fine-grained timing changes that the STTS table may smooth over when the frames are written out, giving a closer look at how the camera reacted to the warped scenery. Such changes typically occur because of rapid luminance swings, impulses or vibrations that disrupt OIS/EIS, low light, or anomalous rolling-shutter geometry that forces changes in encoder reordering. Once again, timing anomalies appear in the data as the drone flies directly overhead on frame 143 and continue until I angle the camera down.

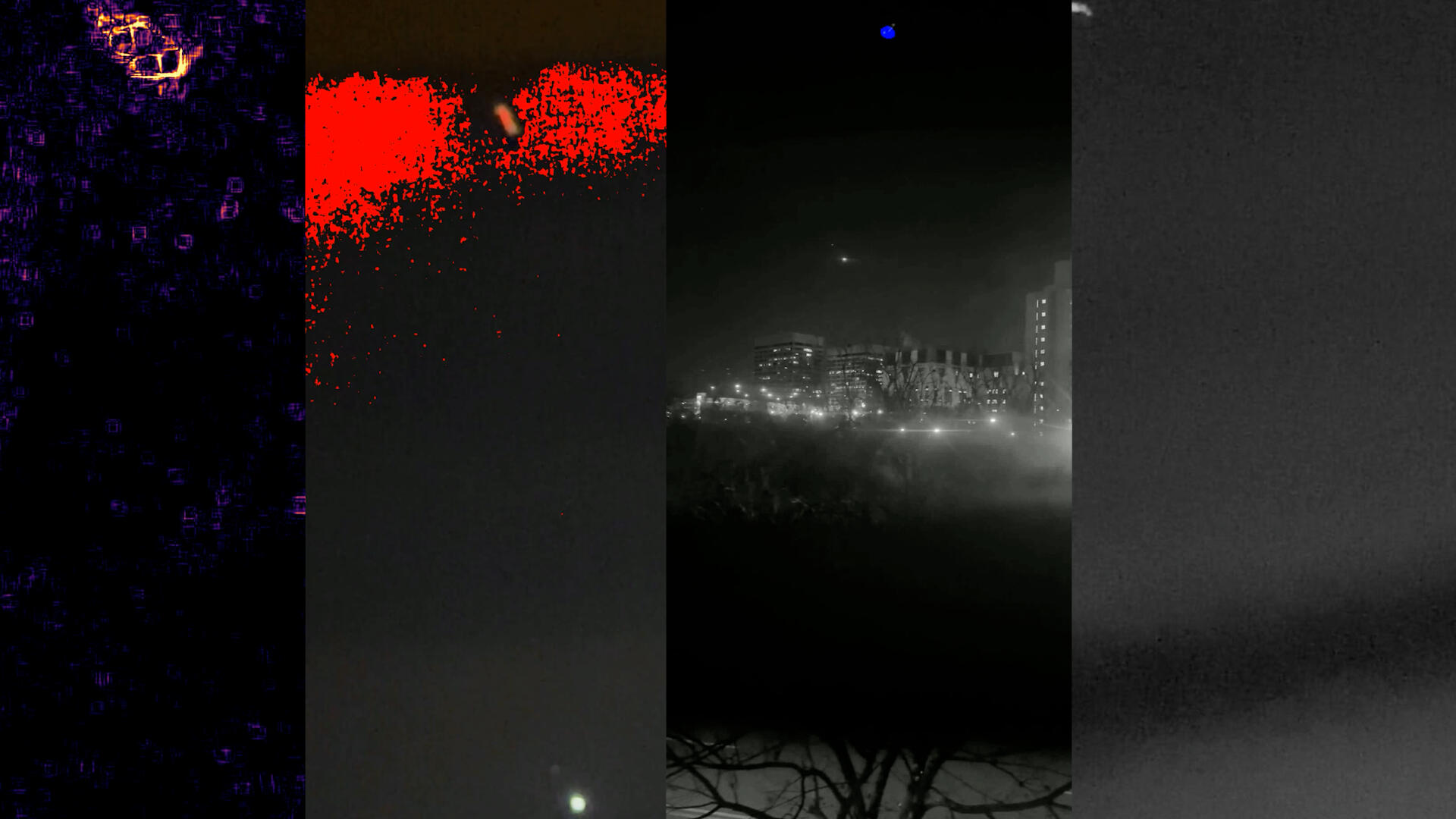

Optical Flow Analysis

The algorithm compares two consecutive frames. For each pixel, it calculates a vector describing how far that pixel's content moved between frames. Mapping these magnitudes onto a color-coded heatmap shows where in the frame the motion or distortion is strongest, and whether the motion is localized or global. In other words, it shows where the camera detects the most visual change. The colors represent the magnitude of motion (pixel displacement) between frames, with each color mapping to a range of motion strength:

Blue / Dark Blue: Low-magnitude motion. Very small pixel displacement from frame to frame; these areas are essentially stable.

Green: Medium-magnitude motion. Noticeable movement, but not extreme.

Yellow / Orange: High-magnitude motion. Pixels are shifting significantly.

Red: Highest-magnitude motion. Areas with the most significant frame-to-frame pixel displacement.

Notice how, in the frame before the sensor blackout, the variation between pixels is at its lowest in the entire video. This further supports the notion of a spatial zone where incoming photons are stretched in time and displaced less between frames, reducing visible motion.
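Premiere Pro and OpenCV (e.g. `cv2.calcOpticalFlowFarneback`) use their own flow algorithms. This sketch swaps in dense Lucas-Kanade flow computed with NumPy as a dependency-light alternative. It returns the per-pixel displacement magnitude that a heatmap like the one above color-codes. Its magnitudes will not match other tools exactly, and the window radius `r` and the conditioning threshold are assumptions.

```python
import numpy as np


def box_sum(a: np.ndarray, r: int) -> np.ndarray:
    """Sum over a (2r+1)x(2r+1) window at every pixel, via an integral image."""
    p = np.pad(a, r, mode="edge")
    c = np.pad(np.cumsum(np.cumsum(p, axis=0), axis=1), ((1, 0), (1, 0)))
    k = 2 * r + 1
    return c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]


def lucas_kanade(prev: np.ndarray, nxt: np.ndarray, r: int = 3):
    """Dense Lucas-Kanade flow (u, v) in pixels/frame between two grayscale
    frames, solving the 2x2 least-squares system in each window."""
    a = prev.astype(np.float64)
    b = nxt.astype(np.float64)
    iy, ix = np.gradient(0.5 * (a + b))    # spatial gradients of the mean frame
    it = b - a                             # temporal gradient
    sxx, syy, sxy = box_sum(ix * ix, r), box_sum(iy * iy, r), box_sum(ix * iy, r)
    sxt, syt = box_sum(ix * it, r), box_sum(iy * it, r)
    det = sxx * syy - sxy ** 2
    ok = det > 1e-9                        # skip textureless / ill-conditioned windows
    safe = np.where(ok, det, 1.0)
    u = np.where(ok, (-syy * sxt + sxy * syt) / safe, 0.0)
    v = np.where(ok, (sxy * sxt - sxx * syt) / safe, 0.0)
    return u, v


def flow_magnitude(u: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Per-pixel displacement magnitude, the quantity a flow heatmap color-codes."""
    return np.hypot(u, v)
```

Lucas-Kanade assumes small displacements (roughly a pixel or two per frame), so large jumps need a coarse-to-fine pyramid, which this sketch omits.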

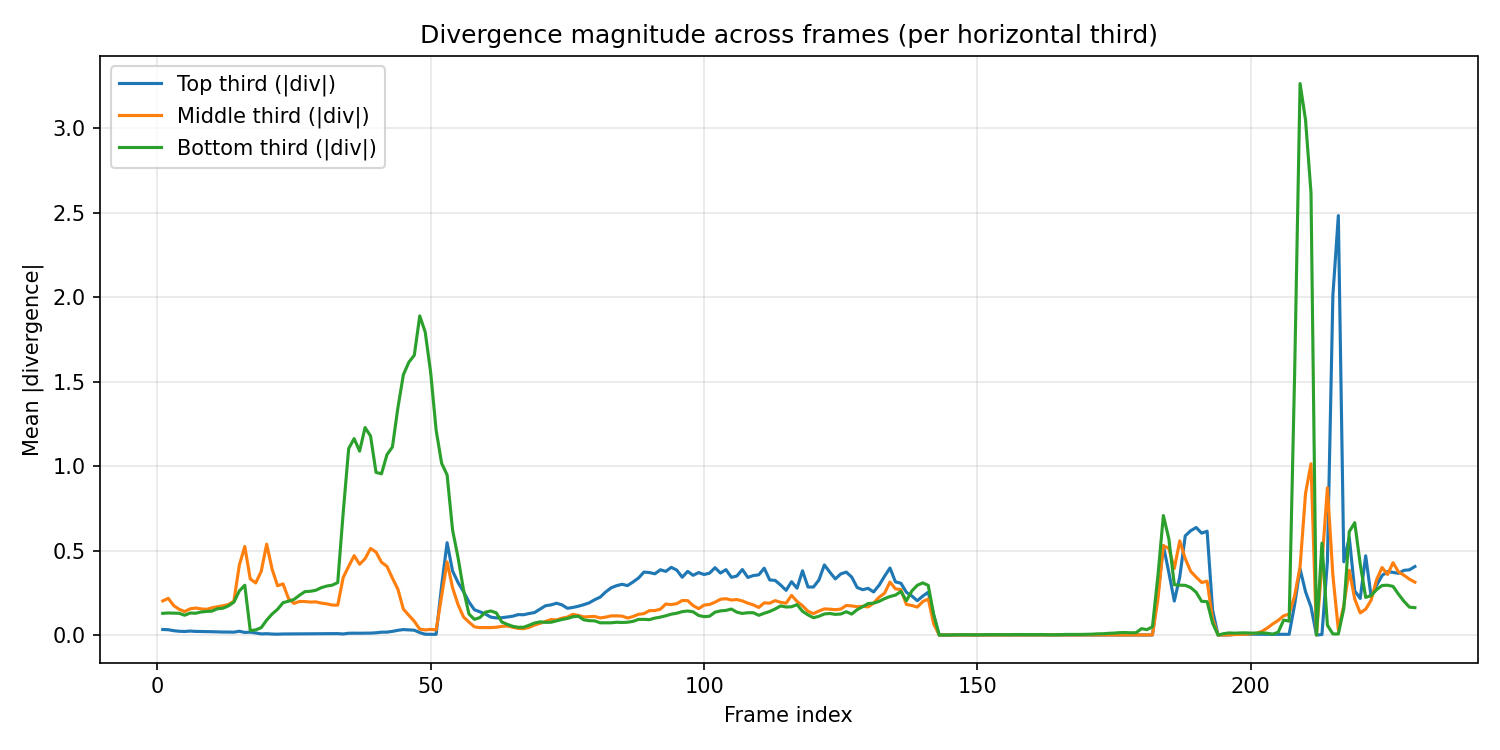

Pixel Divergence

Divergence measures how much the optical flow vectors radiate out from or converge into a region; in other words, the local expansion or compression of pixel motion. Positive values indicate spreading motion, while negative values indicate collapsing motion. The divergence values support a horizontal field that declines vertically during the drone's redshift phase. Pixel divergence shows invisible forces at work, revealing that areas around the drone are rapidly distorting. This data strongly supports the presence of a dynamic, fluctuating energy field that influences the environment beyond the drone and produces nonlinear, oscillating divergence patterns. The data features bursts of strong divergence followed by suppressed periods, a behavior consistent with gravitational field modulation, not conventional jet or rotor propulsion. It supports the notion of a non-visual field altering pixel movement patterns. Alternatively, it is evidence of standard computer vision metrics failing in the presence of extreme velocity, relativistic effects, or sensor interference. Either way, it is distortion caused by a wingless, propellerless, light-manipulating drone as it passes overhead, so does the precise reasoning truly change any of the fundamentals?

Divergence map over the original video (Legend below)

Divergence Map

Black / Deep Purple: Near 0 | No detectable local expansion or compression. Pixel motion is stable.

Dark Red: Low–Moderate | Small localized motion divergence. Subtle spreading/contracting activity.

Orange / Amber: Moderate–High | Significant local divergence. Pixels are moving apart or together rapidly.

Yellow / White: Near threshold or higher | Extreme apparent divergence.

Before the camera zooms, the divergence in the top third of the frame is extremely stable as the drone's field is not yet visible. The field's effects not being visible until magnified is consistent with a localized field. After the camera zoom completes and the redshift onsets, the most extreme region surrounds the drone, with the most significant spike near the drone occurring when the drone is closest to the camera. There is also a notable spike in the windowsill's warped light while the camera is in the blueshift zone.
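Given a dense flow field (u = horizontal motion, v = vertical motion) from any optical flow method, divergence is du/dx + dv/dy. This minimal NumPy sketch includes a helper that averages it over horizontal bands. The function names are hypothetical.

```python
import numpy as np


def flow_divergence(u: np.ndarray, v: np.ndarray) -> np.ndarray:
    """div F = du/dx + dv/dy for a dense flow field.
    Positive = local expansion (vectors spreading); negative = compression."""
    du_dx = np.gradient(u, axis=1)   # columns run along x
    dv_dy = np.gradient(v, axis=0)   # rows run along y
    return du_dx + dv_dy


def band_mean_divergence(div: np.ndarray, n_bands: int = 3) -> list:
    """Mean divergence of each horizontal band, from top to bottom."""
    return [float(b.mean()) for b in np.array_split(div, n_bands, axis=0)]
```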

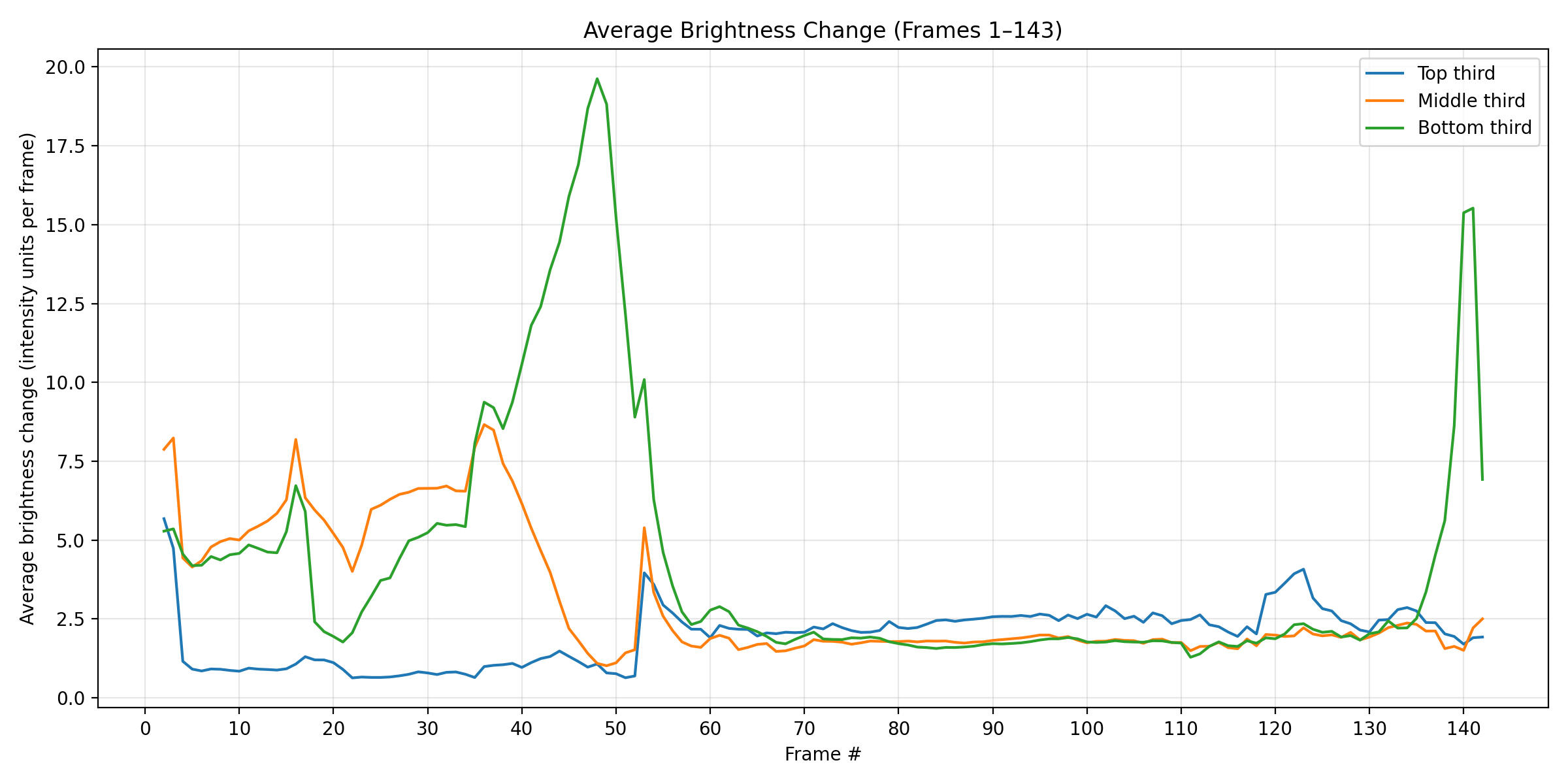

Brightness Change per Pixel per Frame Comparison

Calculating brightness changes to determine how rapidly the light intensity changes over time in different regions of the video

Brightness Changes & blueshift/redshift zone Correlation

At the start of the video, when the lights from the buildings were present and the camera was in the drone's extended and barely visible blueshift field, the top third of the frame was the most stable. As the drone rotates, its field of influence becomes clearly visible, resulting in larger brightness changes in the drone's immediate horizontal area. Once again, the drone starts rotating toward the camera while I am zooming in during frames 35-57, which also explains the brief spike in the bottom thirds during this section. The spike in the top section around frame 80 reflects the drone pulling light into the top of the frame. The spike at the bottom of the frame around frame 140 correlates with the drone pulling the light from the windowsill toward it. When the camera enters the drone's blueshift zone, the brightness changes in each section increase exponentially. These speeds are so anomalous that Premiere Pro's optical flow algorithm cannot effectively determine the motion. As I mentioned in the "Impossible Aspects" section, play the video at around 20% speed and apply optical flow time interpolation using After Effects, Premiere Pro, or OpenCV to observe this phenomenon. This is consistent with a gravitic propulsion mechanism manipulating the surrounding spacetime geometry rather than simply moving through it, causing non-intuitive pixel motion, nonlinear optical flow, and spectral distortion.
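The brightness-change measure can be sketched as the mean absolute per-pixel change in luma between consecutive frames, split into horizontal thirds. This definition is an assumption, since the exact formula behind the chart is not given. Run it on the original decoded frames rather than on optical-flow-interpolated ones, because interpolation synthesizes new pixels.

```python
import numpy as np


def brightness_change_by_third(frames) -> np.ndarray:
    """Mean absolute luma change per pixel between consecutive frames,
    reported for the top, middle, and bottom thirds.
    Returns an array of shape (n_frames - 1, 3)."""
    def luma(rgb):
        # Rec. 709 weights (assumed definition of brightness)
        rgb = rgb.astype(np.float64)
        return 0.2126 * rgb[..., 0] + 0.7152 * rgb[..., 1] + 0.0722 * rgb[..., 2]

    rows = []
    prev = luma(frames[0])
    for rgb in frames[1:]:
        cur = luma(rgb)
        diff = np.abs(cur - prev)
        rows.append([b.mean() for b in np.array_split(diff, 3, axis=0)])
        prev = cur
    return np.array(rows)
```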

Structural Similarity Index Measure (SSIM)

Heatmap Legend

Full Heatmap

Cyan/Green Values

Red/Yellow Values

Reveals consistent low-similarity zones near the drone and moving distortions sweeping from the bottom right to the top left, correlating with the windowsill's light distortion angle and the drone's proximity. Cyan/green zones pull toward the drone while the redshift is active, while the horizontal area near the drone is primarily red/yellow. This pattern mimics gravitational lensing, where nearby photons and visual data distort more as they approach a gravity well. The color-matched disruption traveling toward the drone mirrors what you'd expect from gravitational pull acting on both EM radiation and local objects. The similarity map shows real-world background light bending, which is once again consistent with gravitational lensing. The fact that this convergence has the same shape and angle as the windowsill's distortion when the camera is in the blueshift zone is very telling.
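An SSIM map between consecutive frames can be computed in a few lines. This sketch uses the standard SSIM formula with K1 = 0.01 and K2 = 0.03, but it substitutes a 7x7 uniform window for the original paper's Gaussian. scikit-image's `skimage.metrics.structural_similarity(..., full=True)` returns a similar, but not identical, map. `ssim_map` is a hypothetical name.

```python
import numpy as np


def _box_mean(a: np.ndarray, r: int) -> np.ndarray:
    """Mean over a (2r+1)x(2r+1) window (edges padded by reflection)."""
    p = np.pad(a, r, mode="reflect")
    c = np.pad(np.cumsum(np.cumsum(p, axis=0), axis=1), ((1, 0), (1, 0)))
    k = 2 * r + 1
    return (c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]) / (k * k)


def ssim_map(x: np.ndarray, y: np.ndarray, r: int = 3, data_range: float = 255.0) -> np.ndarray:
    """Per-pixel SSIM between two grayscale frames with a uniform window.
    1 = locally identical; values near 0 or below = low similarity."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = _box_mean(x, r), _box_mean(y, r)
    vx = _box_mean(x * x, r) - mx ** 2          # local variances
    vy = _box_mean(y * y, r) - my ** 2
    cxy = _box_mean(x * y, r) - mx * my         # local covariance
    num = (2 * mx * my + c1) * (2 * cxy + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return num / den
```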

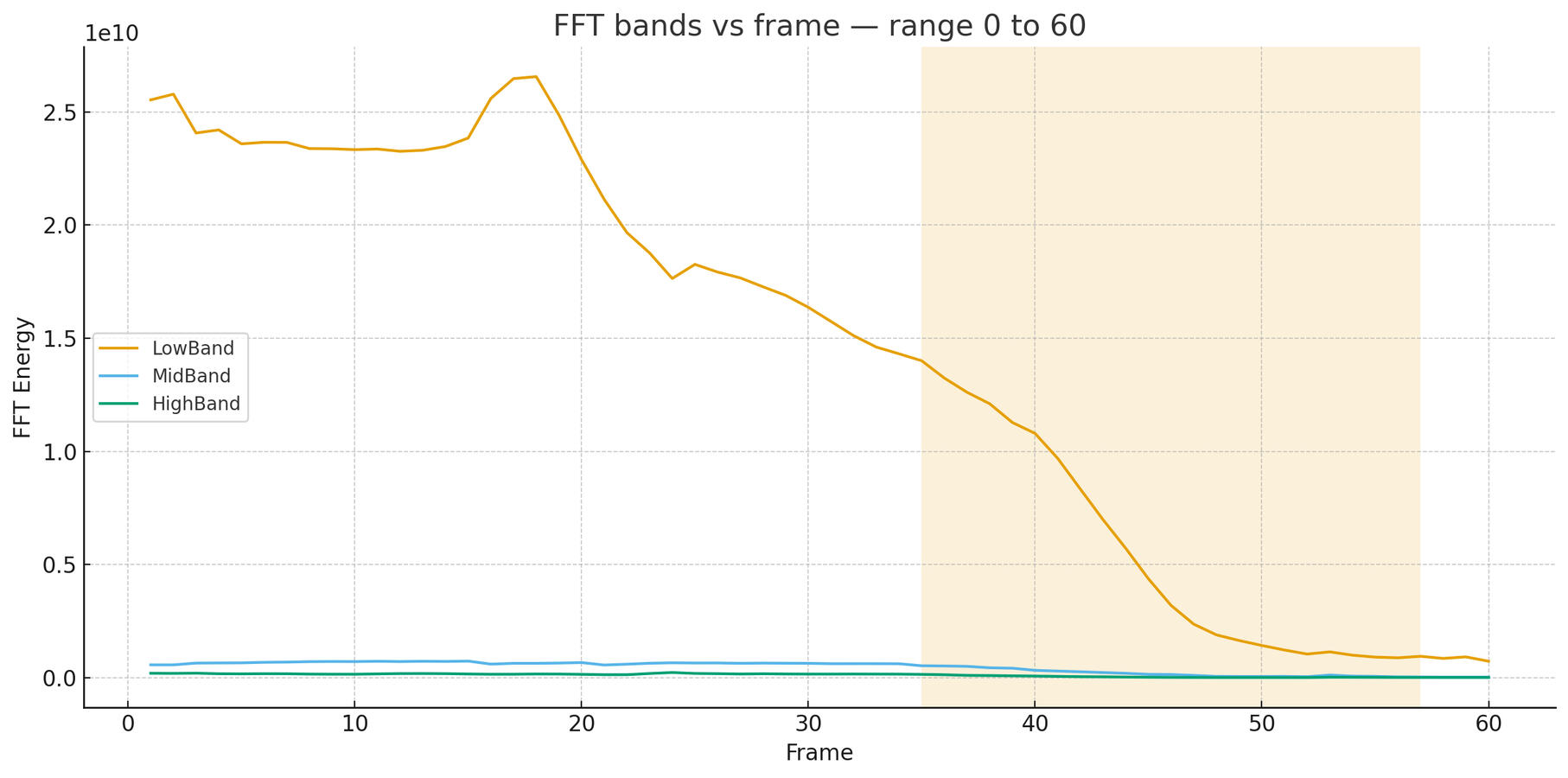

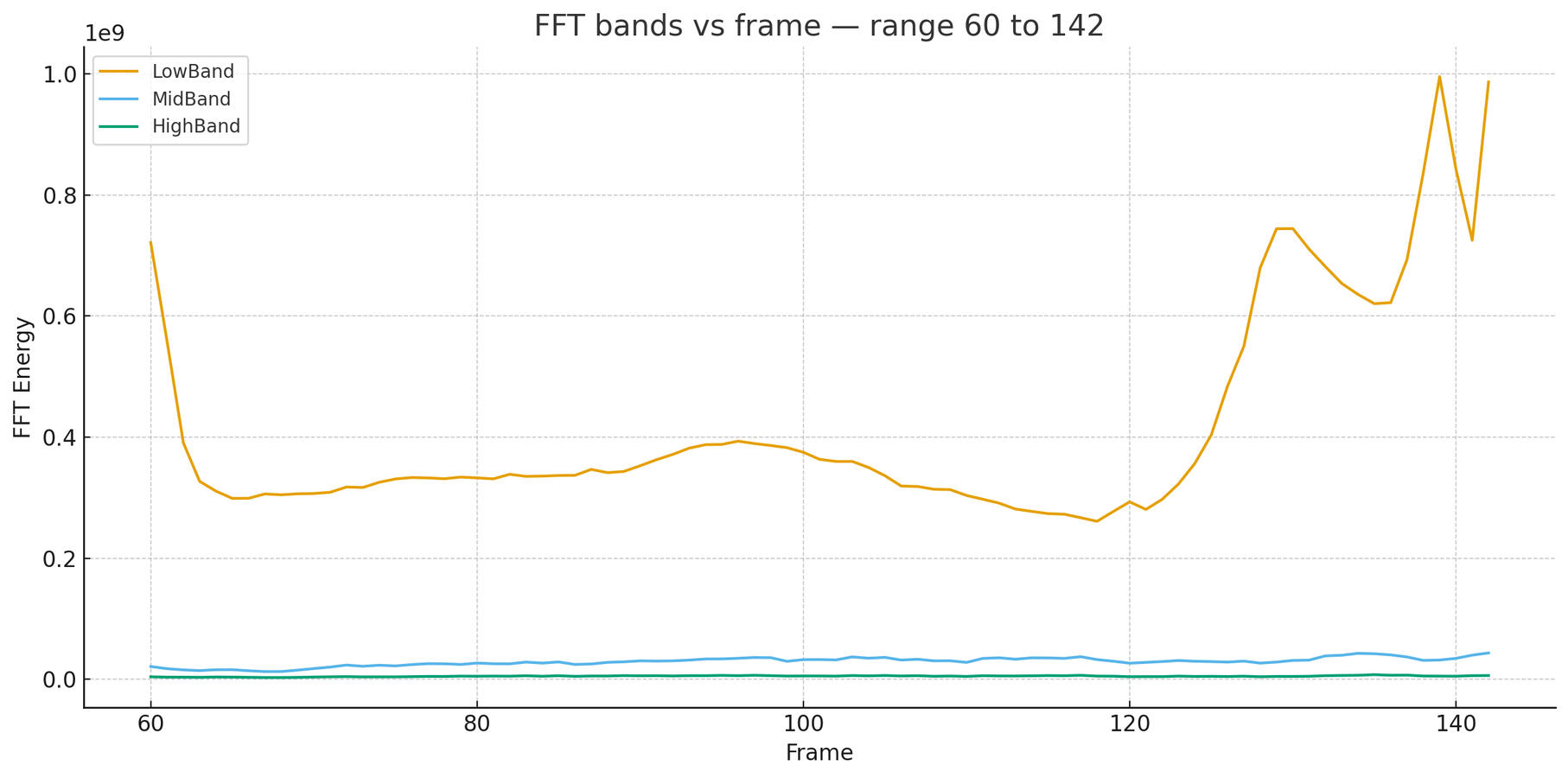

Fast Fourier Transform (FFT)

The FFT energy is high at the start of the video due to the wide camera angle and the lit buildings in the background. As the camera zooms, the FFT energy drops dramatically. A drop in FFT energy is common when an iPhone switches lenses during a zoom; however, the plateau continues long after the lens switch, which is abnormal. The FFT drop during the zoom is also abnormally large compared to a conventional video. When the camera enters the drone's blueshift zone, all energy bands drop before spiking to the highest values recorded since the zoom (frame 57). The FFT band energy signifies the pipeline struggling and re-locking under unusual conditions, along with muted signal strength while the drone's field is being recorded.
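The text does not define its FFT bands. One reasonable assumption, used in this sketch, is to split each frame's 2-D power spectrum into radial frequency bands and sum the energy in each. The DC term is excluded so that overall exposure changes do not dominate the lowest band. `fft_band_energy` is a hypothetical name.

```python
import numpy as np


def fft_band_energy(gray: np.ndarray, n_bands: int = 4) -> list:
    """Energy of a frame's 2-D power spectrum in `n_bands` radial
    frequency bands, ordered from low to high frequency (DC excluded)."""
    g = gray.astype(np.float64)
    power = np.abs(np.fft.fftshift(np.fft.fft2(g))) ** 2
    h, w = g.shape
    fy = np.fft.fftshift(np.fft.fftfreq(h))[:, None]
    fx = np.fft.fftshift(np.fft.fftfreq(w))[None, :]
    radius = np.hypot(fx, fy) / np.hypot(0.5, 0.5)   # normalize to [0, 1]
    power[radius == 0] = 0.0                         # drop the DC (mean) term
    edges = np.linspace(0.0, 1.0, n_bands + 1)
    energies = []
    for i in range(n_bands):
        lo, hi = edges[i], edges[i + 1]
        upper = radius < hi if i < n_bands - 1 else radius <= hi
        energies.append(float(power[(radius >= lo) & upper].sum()))
    return energies
```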

Universal Luminance Flattening as the Camera Films the Redshift

Inferno Colormap Legend: The 'inferno' colormap ranges from dark purple to yellow-white. Darker areas indicate low magnitude (no significant edge), while brighter areas indicate high magnitude (strong edges or distortion).

Color Interpretation:

Dark Purple/Black: Areas with low luminance change or little to no edge. These are the regions of the frame where the content is relatively unchanged (low edge detection).

Yellow/White: Areas with high luminance change, representing strong edges or distortion. These could correspond to motion in the frame, sharp boundaries, or high-contrast transitions.

By analyzing the 10 consecutive frames with the lowest average luminance before the camera enters the drone’s immediate field at frame 143, you will find that the luminance reaches its minimum precisely when the redshift is directed toward the camera. This aligns perfectly with the well-documented phenomenon of luminance suppression observed when filming within a gravitational redshift zone.
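Both steps above can be sketched in NumPy. The first is a Sobel gradient magnitude, assumed here to be the quantity an 'inferno' edge map visualizes. The second is a rolling search for the 10 consecutive frames with the lowest mean luminance before a cutoff frame. Both helper names are hypothetical, and `frame_means` would come from averaging each frame's luma.

```python
from typing import Optional

import numpy as np


def sobel_magnitude(gray: np.ndarray) -> np.ndarray:
    """Sobel gradient magnitude of a grayscale frame (edges replicated)."""
    g = np.pad(gray.astype(np.float64), 1, mode="edge")
    # horizontal gradient: right column minus left column, weights 1-2-1
    gx = (g[:-2, 2:] + 2 * g[1:-1, 2:] + g[2:, 2:]) - (g[:-2, :-2] + 2 * g[1:-1, :-2] + g[2:, :-2])
    # vertical gradient: bottom row minus top row, weights 1-2-1
    gy = (g[2:, :-2] + 2 * g[2:, 1:-1] + g[2:, 2:]) - (g[:-2, :-2] + 2 * g[:-2, 1:-1] + g[:-2, 2:])
    return np.hypot(gx, gy)


def lowest_mean_window(frame_means, window: int = 10, stop: Optional[int] = None):
    """Find the `window` consecutive frames with the lowest average luminance,
    searching only frames before index `stop` (e.g. stop=143).
    Returns (start_index, mean_of_window)."""
    m = np.asarray(frame_means, dtype=np.float64)
    if stop is not None:
        m = m[:stop]
    if len(m) < window:
        raise ValueError("not enough frames for the requested window")
    sums = np.convolve(m, np.ones(window), mode="valid")   # rolling window sums
    start = int(np.argmin(sums))
    return start, float(sums[start] / window)
```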

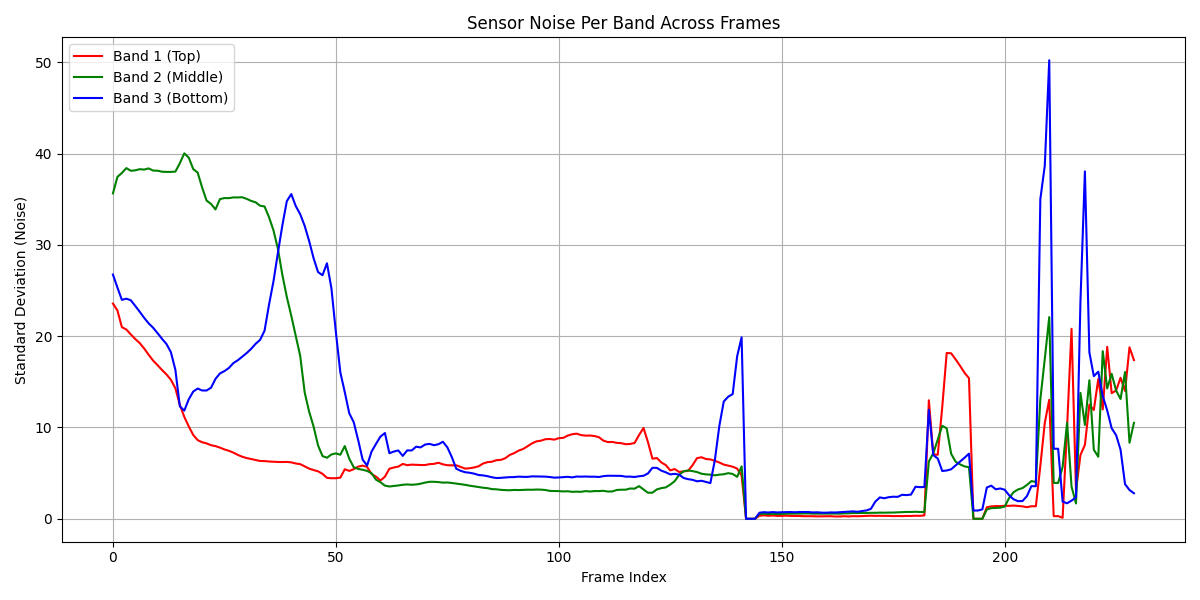

Sensor Noise

Along with the largest brightness changes, the box around the drone consistently shows the highest sensor noise during the redshift phase. If a conventional cause were responsible, the sensor noise would not spike as it does after frame 143. This further proves that the anomalies are field-based and cannot be explained by normal camera behavior.
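The text does not specify the noise metric. A common rough estimate, assumed here, is the standard deviation of the high-pass residual left after subtracting a 3x3 local mean inside the box. The factor 3/sqrt(8) rescales it so that pure white noise returns its true standard deviation. `box_noise` and the box coordinates are hypothetical.

```python
import numpy as np


def box_noise(gray: np.ndarray, box) -> float:
    """Rough noise estimate inside box = (x0, y0, x1, y1): std of the residual
    after removing a 3x3 local mean, rescaled so white noise of std sigma
    returns approximately sigma (the raw residual std is sqrt(8/9) * sigma)."""
    x0, y0, x1, y1 = box
    g = gray[y0:y1, x0:x1].astype(np.float64)
    p = np.pad(g, 1, mode="edge")
    h, w = g.shape
    local_mean = sum(p[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)) / 9.0
    return float((g - local_mean).std() * 3.0 / np.sqrt(8.0))
```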

TLDR: The drone causes a redshift at its center and in the light ahead of its direction of travel, a blueshift of the light behind it, and a radial disappearance of light near it, despite only featuring a warped middle in person with no visible wavelength changes. The drone is proven to be initially transparent on video before its acceleration. It has no wings or propellers, and it completes a ~180-degree horizontal and ~135-degree vertical rotation on camera despite no visible exhaust. It warps light unevenly, distorting the light closest to its core the most. It changes shape and size on camera despite being a fixed shape and size in real life, and it never enlarges on camera despite flying ~200 feet closer to the camera and descending in altitude. When the drone's redshifted center points at the camera, its horizontal field of influence becomes clear through a visual and quantitative analysis of the video using multiple methods. The horizontal area near the drone consistently features the most anomalous luminance characteristics and pixel behavior throughout its entire flight. As the field gets closer to the camera and rotates, all of the light in the frame warps, identically matching the theorized directional movement of a spacetime expansion and compression zone. As the drone flies directly overhead and the camera enters its blueshift zone, it causes several effects:

- extreme light warping in the same shape as the previously seen warped light
- an Image Signal Processing system failure
- anomalous pixel brightness changes and directional movement
- light-based FPS oscillations

All of this can be independently quantified and verified, along with other anomalies. All anomalies align with my witness testimony, which was documented before I conducted this forensic analysis. No known physical phenomenon or propulsion system can describe all of these anomalies together...besides gravitic propulsion. Now, onto the biological effects.

Immediate Conscious & Biological Effects

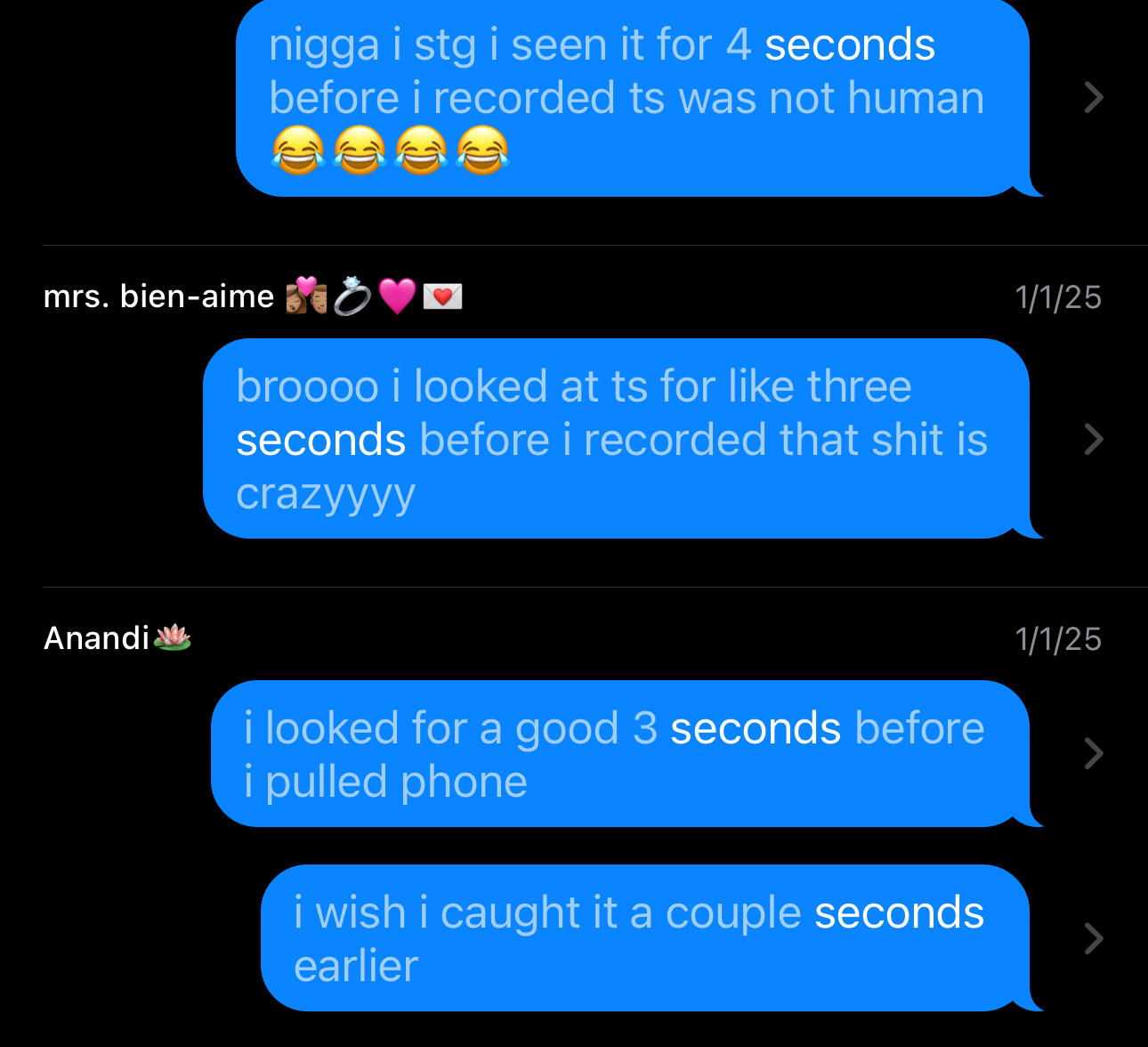

Time Dilation

The conscious effect that undeniably proves what I witnessed was a gravitic propulsion drone is the altered time perception I experienced. As you might have noticed in my text messages, I reported seeing the drone for only about 3 to 4 seconds before the video began. However, if you watch my flight path recreation, you will notice that its flight was much longer than 3 to 4 seconds. Immediately after the encounter, I repeatedly stated that I observed the drone for 5 to 10 seconds at most before I started the video. It wasn't until weeks later that I finally realized I had watched the drone flying near the intersection for around 30 seconds. This delayed error in time perception is due to the drone's time distortion and memory encoding errors caused by the drone's field. In actuality, I am 100% sure that I watched the drone for about 30 to 40 seconds before the video starts. Along with the length of the video, the only reason I'm able to estimate an approximate length for the encounter is that the drone moved at a constant speed throughout its whole flight, and I vividly remember the majority of its movements. As the drone flew overhead, I vividly remember the black car traveling down the street around five seconds after I stopped recording the video. My perception of time was much slower than what actually occurred, as you can see the car traveling down the street as the video ends. I didn't even notice that I had filmed the drone operators' car until a few weeks later, because my warped perception of time made me assume it was impossible that I could have filmed it. Time feeling faster in front of the drone and slower behind it is consistent with the redshift and blueshift locations of the drone on the video. No technology except gravitic propulsion can cause this.

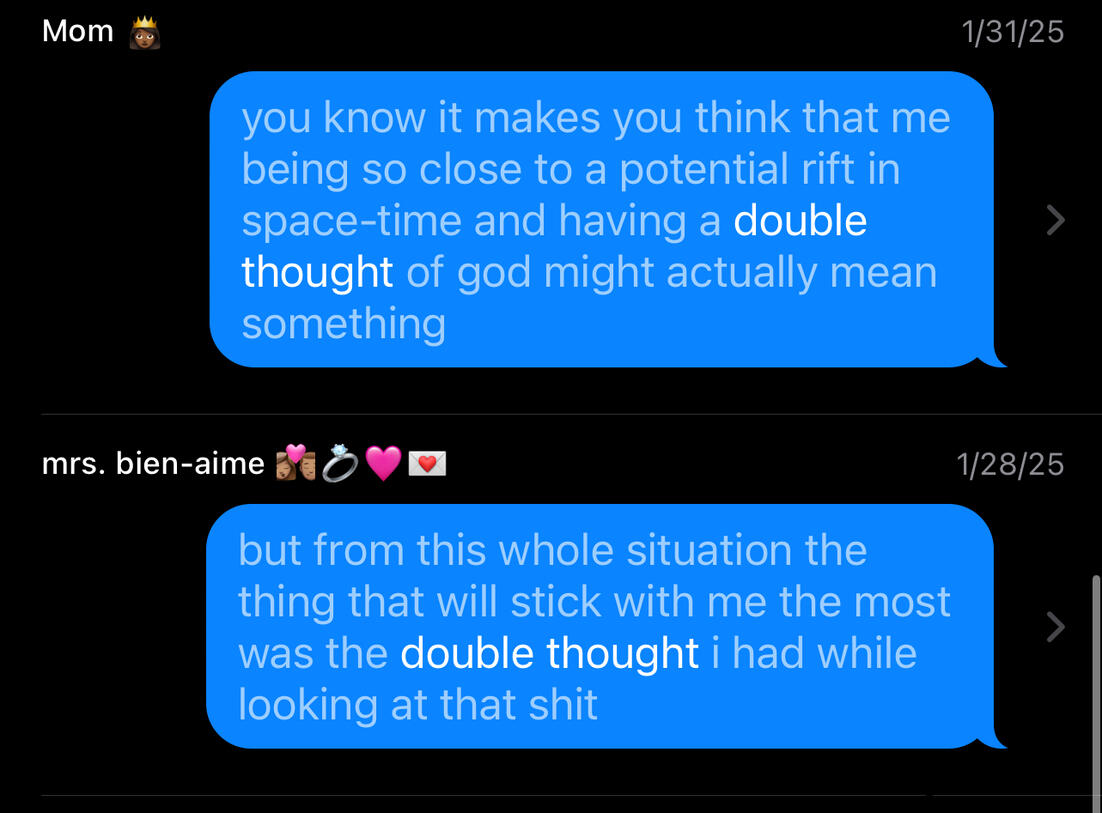

Double Thought

While I was observing the drone, I asked myself, "What exactly am I looking at?" A moment later, as I tried to understand what I was observing, an intrusive and overlapping thought suddenly emerged in my mind and said, "God." I paused in confusion for a second; I knew God did not look like a baby alien with two red lights, so I was confused about how this thought arose. What’s strange about this thought is that it overlapped with my current train of thought, causing me to think two different things at once.

Memory Gap

As I mentioned in the pre-recording flight path recap, there is one section of the encounter that I cannot recall at all. I cannot remember how the drone went from being slightly below window level near the middle of the intersection to my right, to being directly in front of me on the opposite sidewalk, just a few inches above the ground. My memory of the flight was also spotty, and I initially kept recalling the drone's movements in the wrong order.

Double Memory

When I was first describing the drone's exact flight to my friend, I mistakenly said that the drone was just a few inches in front of me when it performed its vertical 90-degree climb. I told him that the drone was in front of the trees on our side of the street, rather than in its correct position in front of the trees across the street. As I tried to recall this 90-degree movement, an intrusive vision of the drone directly in front of me kept overriding my memory. Once again, this 90-degree climb happened immediately after the only moment during the event when my memory is completely blank.

You could attribute this to a normal PTSD response, since remembering a trigger as closer than it actually was is common, until you consider that I was overcome with only positive emotions and felt no trauma, even while experiencing this warped recollection as I described the sighting to my friend. I suppose one could argue that this trauma was neurological rather than emotional. However, the memory gap immediately preceding this double memory and the drone's position during this period raise questions, especially when you consider that this movement was presumably unnecessary unless there was an unintended witness, since it extended the length of their mission. When I learned that this "alien" was actually a drone, I initially assumed that the operator repositioning it directly in front of me was somehow necessary for its flight. Given these memory encoding abnormalities, I now know that the reason for this movement was far more sinister than a simple readjustment: it was a way to disrupt the memory of, or induce a neurological effect on, any unintended observer.

Side note: Here I was, thinking I was forming a bond with a baby alien, when I was actually being attacked by a Chinese anti-gravity weapon of mass destruction. Pretty funny, in my opinion.

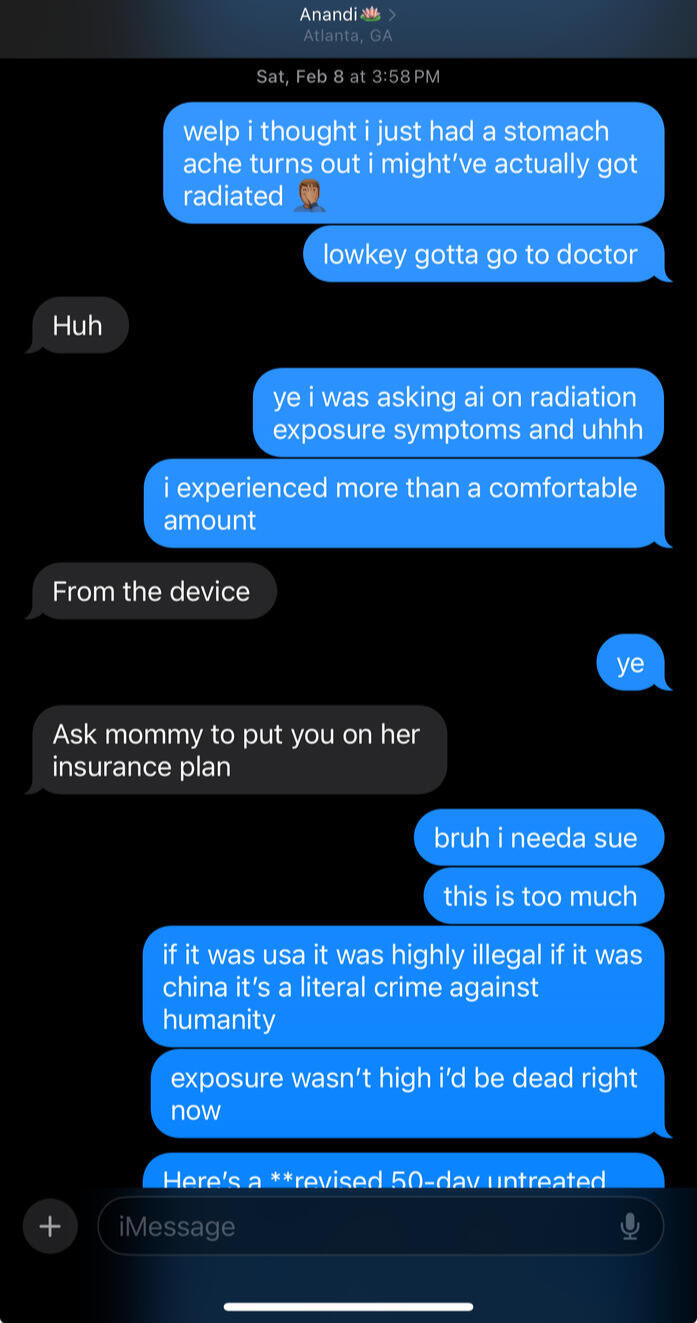

Headache & Stomachache

The next day, I had a headache that developed in the afternoon and lasted the rest of the day. I've had maybe two headaches in my entire life, so the only logical conclusion is that I was experiencing a common side effect of exposure to radiation or a high-intensity electromagnetic field. I took some Aleve that day, and it was completely ineffective. That same day, I also developed a stomachache and had diarrhea for the next few days (TMI, I'm sorry).

Skin Redness

For the first couple of days after the sighting, every time I scratched my skin, it would become noticeably red. I only remember this redness occurring on the upper part of my chest, although it might have been present when I scratched other parts of my body.

Watery Eyes

For the next few days, I noticed my pillow was unusually wet every time I woke up. I also had to rub my eyes a lot because they were frequently itchy.

Cold Resistance

For the next few days, every time I walked outside, it didn't feel cold, even though the weather report said it was.

Increased Vivid Dreams

Since witnessing the drone, I've been having very vivid dreams maybe 4 to 5 times a week, often multiple times per night. Before this, remembering a dream was rare for me; I'd typically remember maybe one a month. It now also takes me only about 15 to 30 minutes after falling asleep to enter REM sleep and start dreaming. Unfortunately, most of these dreams have been nightmares. Vivid dreams are a common symptom of quitting weed; however, during this time I was still smoking at the same rate as before the drone.

Constant Thoughts

For about the first week after the sighting, the drone was all I could think about. I spent almost every waking minute thinking about the drone and its implications. My roommate eventually went back to his girlfriend's house after being subjected to nonstop, 5+ hour rants about what had just happened. After the 60-hour Secret Service stingray across the street from me departed, I tried to open my computer to resume my bank fraud operation. Every attempt failed because I couldn't hold my focus for more than a few minutes. I could think about nothing except the drone, philosophy, theories about the universe, and what was happening inside both governments regarding the drone situation. When my mother asked me to stop talking about the drone, I even told her, "I think the drone took over my brain."

Honestly, this hasn't stopped, as this event is the only thing I focus on for 90% of the week, but it has definitely calmed down a lot. These thoughts have always been positive, and I never panicked or felt uneasy despite the gravity of the situation. I was only slightly paranoid the night of the sighting, but once I didn't get suicided the day after the attack, I realized my life likely was not in danger, and the slight paranoia vanished. Even that night, the excitement far outweighed the paranoia, so I was mostly at peace.

Fatigue

For a week or so after the drone, I was noticeably more tired and felt unusually sluggish.

iPhone Camera

This isn't a biological effect, but my iPhone's camera sensor showed clear signs of damage after the drone attack. It's still damaged; the effects are most visible in the videos above and in the videos I recorded of the Secret Service event. I don't have a scientific explanation for this sensor damage, but every video I recorded before this event is free of these anomalies. I'm sure a CMOS technician or physicist could provide a detailed explanation, but if you still don't believe me at this point, an explanation of sensor damage will likely change nothing. How much more proof do you need? Download the drone video, read Matthew Livelsberger's message, read my testimony, research the basic principles of anti-gravity technology, use common sense, and don't listen to your government.

Delayed Effects

Visual Snow Syndrome

A few weeks after the event, I noticed tiny sparks of light in my vision. Initially, I believed I was glimpsing other dimensions or something, but it turned out to be just visual snow syndrome. Additionally, when I look at a blue sky, I notice a bunch of dancing white floaters in my field of vision. I also now see more frequent and more pronounced halos around lights.

Abdominal Pain

Around the time I noticed the visual snow syndrome, I started having bouts of stomach pain and frequent foul-smelling farts. By foul-smelling, I mean the worst ever; they lingered for about 5 minutes too. This lasted for a little over a month (TMI again, my bad, it's for science).

Rapid Healing

At the beginning of March, my dog cut me a few times, and each cut healed in under two hours, fading into light red spots. This happened on three separate occasions. This rapid healing stopped a few weeks later.

Fatigue Pt.2

Around the same time as the rapid healing, I noticed that I was, once again, considerably more tired throughout the day. Shortly after my first meal of the day (usually around 11 a.m.), I would always become extremely tired and have to take a nap. This stopped around July.

Skin Shedding

Around day 70, the skin on my hands started to peel, as shown above. This occurred on both hands and was not painful. I do not have any skin condition that could cause this. It lasted for about two weeks.

Bilateral "Spirit Gate" & "Green Spirit" Skin Lesions

On day 130, I developed hyperpigmentation and cracked skin on both wrists, in line with my pinky fingers. I do not have eczema or any skin condition that could cause this. Interestingly, it's located directly at the Heart 7 (HT7) acupuncture point, also known as the "Spirit Gate." In traditional Chinese medicine (TCM), this point symbolizes where the human physical body connects with the energetic body. As I type this on May 31st, the discoloration is still present, and my right hand, the one I recorded the drone with, shows the most cracking and hyperpigmentation. The cracking sometimes fades during the day, but it comes back after every shower. It turns out bilateral skin lesions are a documented symptom after UAP sightings. On day 150, I developed a tender purple bruise at my HT2 acupuncture point. In TCM, this point is known as the "Green Spirit" or "Youthful Spirit." It is now June 25th, and the cracking is gone, but the hyperpigmentation is still present.

Enhanced Eye Control

Along with the visual snow syndrome, I noticed another change in my vision. I can now expand lights and make them bloom by flexing my eye muscles. I am also able to create a split, eye-dependent copy of objects in my field of view. I can control the distance between these two split images and divert my focus entirely to one light while keeping the other one active. If I rotate my head, the split images rotate with it, matching the angle of my head. The first time I noticed this light-expanding ability, I was testing it on a star in the sky. As I stared at the star, diverting all of my attention to this newfound ability, the buildings and trees around me lost their color and faded into black shadows but kept their geometric outlines. Even when I shifted my focus onto these buildings, they remained completely black.

Analyze 9 seconds of truth or accept a lifetime of lies

The truth always carries the ambiguity of the words used to express it. That is why I'm giving you access to the original video file so you can analyze everything yourself! Once again, I encourage you to conduct your own research and arrive at your own conclusion. If you doubt me, that is perfectly fine. There was even a time when I doubted myself, and I was the one 50 feet in front of the drone and inside its warp field. So I understand, but initial skepticism is not a valid reason to dismiss or deny what happened. Perform your own analysis, study our testimonies, and you will uncover the truth.